Safety in a digital age: old and new problems

Algorithms, Machine Learning, Big Data & Artificial Intelligence

The issue

Algorithms, machine learning, big data and artificial intelligence (AI) are keywords of an ongoing transformation of societies. A first wave of internet development, coupled with the spread of personal computers, came in the 1990s. The 2010s then brought a second level of connectedness through smartphones and tablets, generating massive amounts of data. This new environment of big data, partly generated by a growing internet of things, supported the proliferation of algorithms, machine learning and a new generation of AI (NSTC, 2016a, 2016b). Without falling into the trap of technological determinism, this transformation through digitalisation clearly affects every sphere of social life, including culture, economy, politics, art, education, health, family, identity and social relations.

One can easily find examples of how daily life is affected in each of these spheres. Social media (e.g. Facebook, Twitter, LinkedIn, ResearchGate), search engines (e.g. Google, Bing, Qwant) and websites in many other areas are only a few of them. These areas include online shopping (e.g. Amazon, Fnac), music (e.g. Spotify, Deezer), news (e.g. The New York Times, Le Monde, the Financial Times), videos, series, cinema and programmes (e.g. Netflix, YouTube, Dailymotion) and activism (e.g. SumOfUs). An exhaustive list is simply impossible. However, the rise of internet giants (GAFAM/N for Google, Apple, Facebook, Amazon, Netflix, Microsoft) has raised concerns ranging from business monopoly and taxation to data privacy, reflecting growing unease among civil societies and states.

A digital society is slowly emerging, somewhere between present reality and the futuristic or dystopian visions of the decades to come. It has indeed become very clear to sociologists that we now empirically live in a mediated, constructed reality (e.g. Couldry, Hepp, 2017; Cardon, 2019). Some wonder whether these changes should be characterised as an evolution or a revolution (e.g. Rieffel, 2014). Others now warn of a re-engineering of humanity, given the extent of the material, cognitive and social modifications of our environment (e.g. Frischmann, Selinger, 2018).

Narrowing this panoramic view, the implications for work, organisations, businesses and regulation in general are profound. In some cases they seem obvious, while in other areas they remain partly uncertain. For instance, how much of work as we know it will change in the future? Estimates of the share of current jobs that could disappear within the next few decades because of AI range from 9% to 47%. Whatever the extent of this replacement or mutation, combining human jobs with AI, or simply relieving people of current tasks, would likely change the nature of work as well as the configuration and management of organisations. Added to this is a growing cybersecurity challenge.

In the platform, digital and gig economy (e.g. Amazon, Uber, Deliveroo), for instance, the term algorithmic management has been coined to characterise the working conditions of these new workers (Lee et al., 2015). Businesses face threats to their market positions from several directions:
- innovative ways of interacting with customers through social media and data;
- new ways of organising work processes;
- start-up competitors that redefine the nature of their activities.

Business leaders must adapt to this digitalisation of markets and to potential disruptions based on big data, machine learning and AI. They must develop strategies to keep up with a challenging and rapidly changing environment (Deshayes, 2019).

The same applies to regulation. Because algorithms, machine learning, big data and AI are now used pervasively across society, notions such as algorithmic governmentality (Rouvroy, Berns, 2013) and algorithmic regulation (Yeung, 2017) have been developed to identify and conceptualise some of the challenges faced by regulators. Cases of algorithmic bias, algorithmic law-breaking, algorithmic propaganda, algorithmic manipulation and also algorithmic unknowns have occurred in the recent past. Examples include the Cambridge Analytica/Facebook scandal surrounding the 2016 US presidential election and Dieselgate, triggered by Volkswagen’s software fraud (Andrews, 2017). This creates new challenges for controlling the proliferation of algorithms, and some in the US have already called for a National Algorithm Safety Board (e.g. Macaulay, 2017).

This last point connects digitalisation with safety. How might this development affect the activities, organisation, management and regulation of high-risk and safety-critical systems? The proliferation of big data, algorithmic influence and cybersecurity challenges could have safety-related impacts in several sectors:
- healthcare (e.g. hospitals, drugs);
- transport (e.g. aviation, railway, road);
- energy production and distribution (e.g. nuclear power plants, refineries, dams, pipelines, grids);
- the production of goods (e.g. chemicals, food);
- services (e.g. finance, electronic communication).

What could those impacts be? Understanding how these systems operate in this new digital context has become a core issue. It is the role of research to offer lenses through which to grasp how such systems evolve, and what this means for safety.

In many of these areas, research traditions in the safety field can help to question, anticipate and prevent potential incidents. They can also help to support, foster and improve safety performance in a digital context (Almklov, Antonsen, Størkersen & Roe, 2018; Le Coze, 2020). For instance, tasks so far performed by humans may be redesigned through higher levels of automation by AI, whether in autonomous vehicles or in human-machine teaming (NSTC, 2016b). What becomes of human error, human-machine interfaces, reliability and learning in this new context (Smith, Hoffmann, 2017)? What are the consequences of shifting more decision-making to machines? Algorithms bring new sources of information, new tools for information processing and new ways of “pre-programming” actions and decisions. What does their use imply for the distribution of power and decision-making authority?

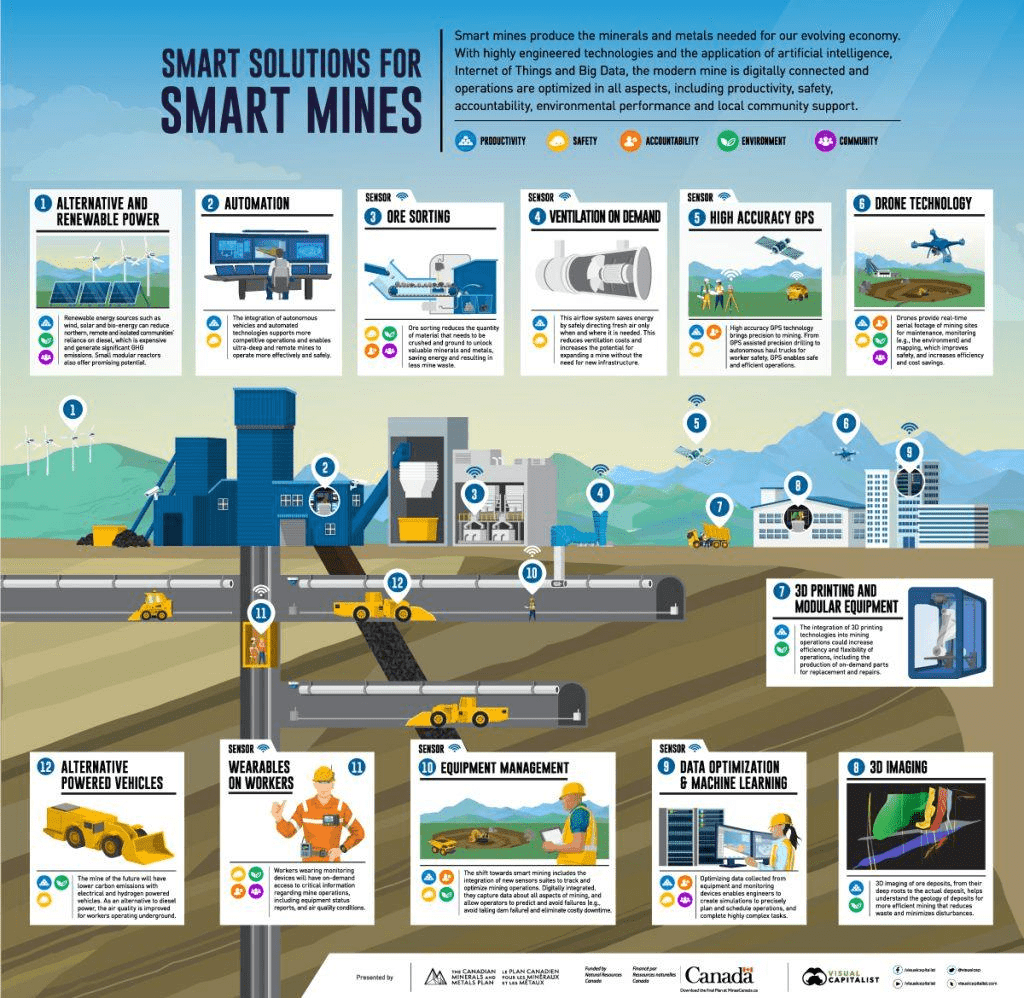

The same applies to organisational and regulatory analyses of safety-critical systems, such as those developed in the high-reliability organisation (Ramanujam, Roberts, 2018) and risk regulation regime (Drahos, 2017) research traditions. What if the prospect of smart plants, aircraft or mines illustrated in Figure 1 were realised? Protective safety equipment, vehicles, individual behaviours and the automation of work schedules could become interlinked through data and algorithmic management. What happens when this delegates to machines a new share of what used to be human decision-making? What are the implications for risk assessment, learning from experience and compliance with rules and regulations, including inspection by authorities?

Importantly, though, how much of this is realistic? What can be anticipated from projections into the future alone, without empirical studies? Which of these problems are new and which are old? The NeTWork workshop in September 2021 is an opportunity to map some of the pressing issues that digitalisation raises for the safe performance of high-risk systems and safety-critical organisations. The focus is on digitalisation based on algorithms, machine learning, big data and artificial intelligence.

References

Almklov, P.G., Antonsen, S., Størkersen, K.V., Roe, E. 2018. Safer societies. Safety Science, 110 (Part C).

Andrews, L. 2017. Algorithms, governance and regulation: beyond ‘the necessary hashtags’. Retrieved October 2019 from https://www.kcl.ac.uk/law/research/centres/telos/assets/DP85-Algorithmic-Regulation-Sep-2017.pdf

Cardon, D. 2019. Culture numérique. Paris: Presses de Sciences Po.

Couldry, N., Hepp, A. 2017. The Mediated Construction of Reality. Cambridge, UK: Polity Press.

Deshayes, C. 2019. La transformation numérique et les patrons. Les dirigeants à la manoeuvre. Paris: Presses des Mines.

Drahos, P. (ed.). 2017. Regulatory Theory: Foundations and Applications. Acton: ANU Press.

Frischmann, B., Selinger, E. 2018. Re-Engineering Humanity. Cambridge: Cambridge University Press.

Le Coze, J.C. (ed.). 2020. Safety Science Research: Evolution, Challenges and New Directions. Boca Raton, FL: CRC Press, Taylor & Francis Group.

Lee, M.K., Kusbit, D., Metsky, E., Dabbish, L. 2015. Working with machines: The impact of algorithmic and data-driven management on human workers. Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, 1603-1612. DOI: 10.1145/2702123.2702548.

Macaulay, T. 2017. ‘Pioneering computer scientist calls for National Algorithm Safety Board’. Techworld, 31 May. Online.

NSTC. 2016a. Big Data: A Report on Algorithmic Systems, Opportunity, and Civil Rights. Executive Office of the President.

NSTC. 2016b. Preparing for the Future of Artificial Intelligence. Executive Office of the President.

Ramanujam, R., Roberts, K.H. (eds.). 2018. Organizing for Reliability: A Guide for Research and Practice. Stanford, CA: Stanford University Press.

Rieffel, R. 2014. Révolution numérique, révolution culturelle? Paris: Gallimard.

Rouvroy, A., Berns, T. 2013. Gouvernementalité algorithmique et perspectives d’émancipation. Le disparate comme condition d’individuation par la relation? Réseaux, 177 (2013/1), 163-196.

Yeung, K. 2017. Algorithmic regulation: A critical interrogation. Regulation & Governance, 12, 505-523.

Workshop organizers

- Jean-Christophe Le Coze, Ineris, France

- Stian Antonsen, SINTEF, Norway

Outputs

The position papers presented during the workshop were revised by their authors following the discussions at Royaumont. They were then published as an open access volume in the SpringerBriefs in Safety Management series. The book can be downloaded free of charge in PDF and ePub formats.

| Title | Safety in the Digital Age: Sociotechnical Perspectives on Algorithms and Machine Learning |
| --- | --- |
| Editors | Jean-Christophe Le Coze, Stian Antonsen |
| Publisher | Springer |
| Year | 2023 |
| ISBN | 978-3031326332 |